Logistic regression is a statistical method and a fundamental classification algorithm in machine learning. It predicts the probability that a given instance belongs to a particular category in a binary or multi-class classification problem.

Basic Idea: Linear regression predicts a continuous outcome. Logistic regression instead passes a linear combination of the predictors through the logistic function, so its output is the probability that the instance belongs to a particular category.

Mathematical Representation: For binary classification (e.g., spam or not spam, sick or healthy), the formula is:

$$P(Y=1) = \frac{1}{1 + e^{-(\beta_0 + \beta_1 x_1 + \beta_2 x_2 + \dots + \beta_n x_n)}}$$

Where:

- $P(Y=1)$ is the probability of the instance belonging to class 1.

- $x_1, x_2, \dots, x_n$ are the predictor variables.

- $\beta_0, \beta_1, \dots, \beta_n$ are the coefficients.

- $e$ is the base of natural logarithms.
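The formula above can be evaluated directly. The following is a minimal Python sketch; the function names `sigmoid` and `predict_proba` and the coefficient values are hypothetical, chosen only for illustration. The sigmoid is written in a numerically stable form so that very large negative inputs do not overflow `math.exp`.

```python
import math


def sigmoid(z: float) -> float:
    """Logistic function 1 / (1 + e^(-z)), written to avoid overflow."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    # For negative z, use the equivalent form e^z / (1 + e^z)
    ez = math.exp(z)
    return ez / (1.0 + ez)


def predict_proba(x: list[float], beta0: float, betas: list[float]) -> float:
    """Return P(Y=1) for one instance x, given intercept beta0 and coefficients betas."""
    if len(x) != len(betas):
        raise ValueError("x and betas must have the same length")
    # Linear combination z = beta0 + beta1*x1 + ... + betan*xn
    z = beta0 + sum(b * xi for b, xi in zip(betas, x))
    return sigmoid(z)


# Hypothetical two-predictor model: beta0 = -1.0, beta1 = 0.5, beta2 = 0.0
print(predict_proba([2.0, 1.0], beta0=-1.0, betas=[0.5, 0.0]))  # prints 0.5, since z = 0
```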

This equation maps any input to a value strictly between 0 and 1, which makes it suitable for probability estimation. The transformation from the input variables to the output is performed by the logistic function (also called the sigmoid function), $\sigma(z) = \frac{1}{1 + e^{-z}}$, an S-shaped curve applied to the linear combination $z = \beta_0 + \beta_1 x_1 + \dots + \beta_n x_n$.
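Inverting the logistic function shows why logistic regression counts as a linear model: the log odds (logit) of class 1 are a linear function of the predictors. Writing $p = P(Y=1)$ and $z = \beta_0 + \beta_1 x_1 + \dots + \beta_n x_n$:

$$
\begin{aligned}
p &= \frac{1}{1 + e^{-z}} \\
1 - p &= \frac{e^{-z}}{1 + e^{-z}} \\
\frac{p}{1 - p} &= e^{z} \\
\ln\frac{p}{1 - p} &= \beta_0 + \beta_1 x_1 + \beta_2 x_2 + \dots + \beta_n x_n
\end{aligned}
$$

The left-hand side of the last line is the log odds; this linear relationship is what the linearity assumption and limitation refer to.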

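Decision Boundary: To convert this probability into a class label, a threshold is selected, commonly 0.5. If $P(Y=1)$ is greater than the threshold, the instance is classified as class 1; otherwise, it is classified as class 0. With a threshold of 0.5, the boundary lies where $\beta_0 + \beta_1 x_1 + \dots + \beta_n x_n = 0$, since the sigmoid equals 0.5 exactly when its input is 0.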
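A minimal sketch of the thresholding rule, assuming a single predictor with hypothetical coefficients $\beta_0 = -3$ and $\beta_1 = 1$, so the 0.5 boundary falls at $x_1 = 3$. The `classify` helper and its `threshold` parameter are hypothetical names used only for illustration.

```python
import math


def sigmoid(z: float) -> float:
    """Numerically stable logistic function."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    ez = math.exp(z)
    return ez / (1.0 + ez)


def classify(x: list[float], beta0: float, betas: list[float], threshold: float = 0.5) -> int:
    """Return 1 if P(Y=1) is greater than the threshold, otherwise 0."""
    z = beta0 + sum(b * xi for b, xi in zip(betas, x))
    return 1 if sigmoid(z) > threshold else 0


# Hypothetical model: beta0 = -3.0, beta1 = 1.0
for x1 in [1.0, 3.0, 5.0]:
    print(x1, classify([x1], beta0=-3.0, betas=[1.0]))
# prints:
# 1.0 0
# 3.0 0   (P(Y=1) is exactly 0.5, which is not greater than the threshold)
# 5.0 1

# A lower threshold labels more instances as class 1
print(classify([2.0], beta0=-3.0, betas=[1.0], threshold=0.2))  # prints 1: P(Y=1) is about 0.27
```

Lowering the threshold trades more false positives for fewer false negatives, which can be preferable when missing a positive case is costly.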

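Learning the Coefficients: The coefficients are chosen to maximize the likelihood of the observed data, an approach known as Maximum Likelihood Estimation (MLE). Unlike ordinary least squares for linear regression, this maximization has no closed-form solution, so it is carried out numerically, for example with gradient-based methods or Newton's method.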
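For $m$ observations with labels $y_i \in \{0, 1\}$ and predicted probabilities $p_i = P(Y=1 \mid x_i)$, MLE maximizes the log-likelihood

$$\ell(\beta) = \sum_{i=1}^{m} \left[ y_i \ln p_i + (1 - y_i) \ln(1 - p_i) \right],$$

whose partial derivative with respect to $\beta_j$ is $\sum_{i=1}^{m} (y_i - p_i)\, x_{ij}$ (with $x_{i0} = 1$ for the intercept). The sketch below maximizes it with plain batch gradient ascent. That optimizer is a simple stand-in chosen for readability (statistical packages commonly use Newton-type methods instead), and `fit_logistic`, `lr`, `n_iter`, and the toy data are hypothetical.

```python
import math


def sigmoid(z: float) -> float:
    """Numerically stable logistic function."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    ez = math.exp(z)
    return ez / (1.0 + ez)


def log_likelihood(X, y, beta0, betas):
    """Sum of y*ln(p) + (1 - y)*ln(1 - p) over all observations."""
    total = 0.0
    for xi, yi in zip(X, y):
        p = sigmoid(beta0 + sum(b * v for b, v in zip(betas, xi)))
        total += yi * math.log(p) + (1 - yi) * math.log(1 - p)
    return total


def fit_logistic(X, y, lr=0.5, n_iter=5000):
    """Estimate (beta0, betas) by gradient ascent on the mean log-likelihood."""
    m, n = len(X), len(X[0])
    beta0, betas = 0.0, [0.0] * n
    for _ in range(n_iter):
        grad0, grads = 0.0, [0.0] * n
        for xi, yi in zip(X, y):
            p = sigmoid(beta0 + sum(b * v for b, v in zip(betas, xi)))
            residual = yi - p  # derivative of this observation's log-likelihood w.r.t. z
            grad0 += residual
            for j in range(n):
                grads[j] += residual * xi[j]
        # Step uphill; dividing by m uses the mean gradient
        beta0 += lr * grad0 / m
        betas = [b + lr * g / m for b, g in zip(betas, grads)]
    return beta0, betas


# Hypothetical toy data: one predictor, overlapping classes (so the MLE is finite)
X = [[0.5], [1.0], [1.5], [2.0], [2.5], [3.0]]
y = [0, 0, 1, 0, 1, 1]
beta0, betas = fit_logistic(X, y)
print(beta0, betas)
print(log_likelihood(X, y, beta0, betas) > log_likelihood(X, y, 0.0, [0.0]))  # True
```

If the classes were perfectly separable, the likelihood would keep increasing as the coefficients grow, so the loop would not settle on finite values; the toy data is chosen to avoid that case.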

Assumptions:

- Binary Logistic Regression: The outcome must be binary.

- Linearity: The log odds of the outcome are assumed to be a linear function of the predictors, which gives a linear decision boundary.

- Independence: Observations are independent.

- No multicollinearity: Predictor variables shouldn’t be highly correlated with each other.

- Large Sample Size: Maximum likelihood estimates can be unstable or biased in small samples, so a sufficiently large sample is needed for reliable results.

Extensions:

- Multinomial Logistic Regression: Used when the outcome has more than two categories.

- Ordinal Logistic Regression: Used when the outcome has ordered categories.
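One common way to write multinomial logistic regression gives each of the $K$ classes its own linear score $z_k$ and converts the scores to probabilities with the softmax function, $P(Y=k) = e^{z_k} / \sum_{j=1}^{K} e^{z_j}$. The sketch below assumes that formulation; `softmax`, `predict_multinomial`, and the coefficients are hypothetical. With two classes and one score fixed at 0, it reduces to the binary sigmoid.

```python
import math


def softmax(scores: list[float]) -> list[float]:
    """Convert per-class scores to probabilities that sum to 1."""
    # Subtracting the max leaves the result unchanged but avoids overflow
    top = max(scores)
    exps = [math.exp(s - top) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def predict_multinomial(x, intercepts, coef_rows):
    """Return P(Y=k) for each class k; coef_rows[k] holds class k's coefficients."""
    scores = [b0 + sum(b * v for b, v in zip(row, x))
              for b0, row in zip(intercepts, coef_rows)]
    return softmax(scores)


# Hypothetical 3-class model with two predictors
probs = predict_multinomial(
    [1.0, 2.0],
    intercepts=[0.0, 1.0, -1.0],
    coef_rows=[[0.5, -0.2], [0.1, 0.3], [-0.4, 0.8]],
)
print(probs, probs.index(max(probs)))  # predicted class is 1 (it has the largest score)

# With two classes and the second score fixed at 0, softmax matches the sigmoid
z = 1.3
print(softmax([z, 0.0])[0], 1 / (1 + math.exp(-z)))
```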

Applications: Logistic regression is widely used across many domains, for example:

- Medicine: Diagnosing diseases (yes or no).

- Finance: Approving credit cards (approved or denied).

- Marketing: Predicting customer churn (stay or leave).

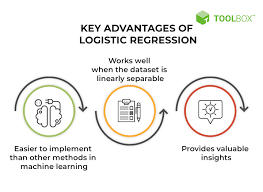

Strengths:

- Outputs have a probabilistic interpretation.

- Can be regularized (for example, with L1 or L2 penalties) to reduce overfitting.

- Simple and interpretable.
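One reason the coefficients are interpretable: because the log odds are linear in the predictors, increasing $x_j$ by one unit (holding the others fixed) multiplies the odds $p / (1 - p)$ by $e^{\beta_j}$. The short check below uses hypothetical coefficients $\beta_0 = -1.0$ and $\beta_1 = 0.7$; the helper names are also hypothetical.

```python
import math


def sigmoid(z: float) -> float:
    """Logistic function (inputs here are small, so the direct form is safe)."""
    return 1.0 / (1.0 + math.exp(-z))


def odds(p: float) -> float:
    """Odds p / (1 - p) of class 1."""
    return p / (1.0 - p)


beta0, beta1 = -1.0, 0.7  # hypothetical fitted coefficients

# Odds at x1 = 2 and at x1 = 3 (one unit higher)
odds_at_2 = odds(sigmoid(beta0 + beta1 * 2.0))
odds_at_3 = odds(sigmoid(beta0 + beta1 * 3.0))

odds_ratio = odds_at_3 / odds_at_2
print(round(odds_ratio, 6), round(math.exp(beta1), 6))  # prints the same value twice
```

Here the odds ratio is about 2.01, so each additional unit of $x_1$ roughly doubles the odds of class 1; note that it is the odds, not the probability, that doubles.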

Limitations:

- Assumes linearity between the predictors and the log odds.

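- Often less accurate than more flexible classifiers, such as tree ensembles or neural networks, on complex datasets or problems with non-linear decision boundaries, unless suitable non-linear features are added by hand.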
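To illustrate the linearity limitation: the XOR pattern below cannot be separated by any straight line in $(x_1, x_2)$, so logistic regression on those two predictors alone cannot classify all four points correctly. The model stays linear in whatever inputs it receives, so one workaround is to supply a non-linear feature by hand; adding the product $x_1 x_2$ as a third input makes the classes separable. The coefficients below are hand-picked for illustration, not fitted, and the `predict` helper is hypothetical.

```python
import math


def sigmoid(z: float) -> float:
    """Numerically stable logistic function."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    ez = math.exp(z)
    return ez / (1.0 + ez)


# XOR data: class 1 exactly when one (but not both) of the inputs is 1
points = [(0, 0), (0, 1), (1, 0), (1, 1)]
labels = [0, 1, 1, 0]

# Hand-picked (hypothetical) coefficients for z = b0 + b1*x1 + b2*x2 + b3*(x1*x2)
b0, b1, b2, b3 = -5.0, 10.0, 10.0, -20.0


def predict(x1: int, x2: int, threshold: float = 0.5) -> int:
    """Classify using the engineered interaction feature x1*x2."""
    z = b0 + b1 * x1 + b2 * x2 + b3 * (x1 * x2)
    return 1 if sigmoid(z) > threshold else 0


predictions = [predict(x1, x2) for x1, x2 in points]
print(predictions == labels)  # True
```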

In summary, logistic regression is a foundational classification algorithm in machine learning and AI. Its strengths are simplicity and interpretability, but for complex data with non-linear decision boundaries, more flexible methods may be necessary.